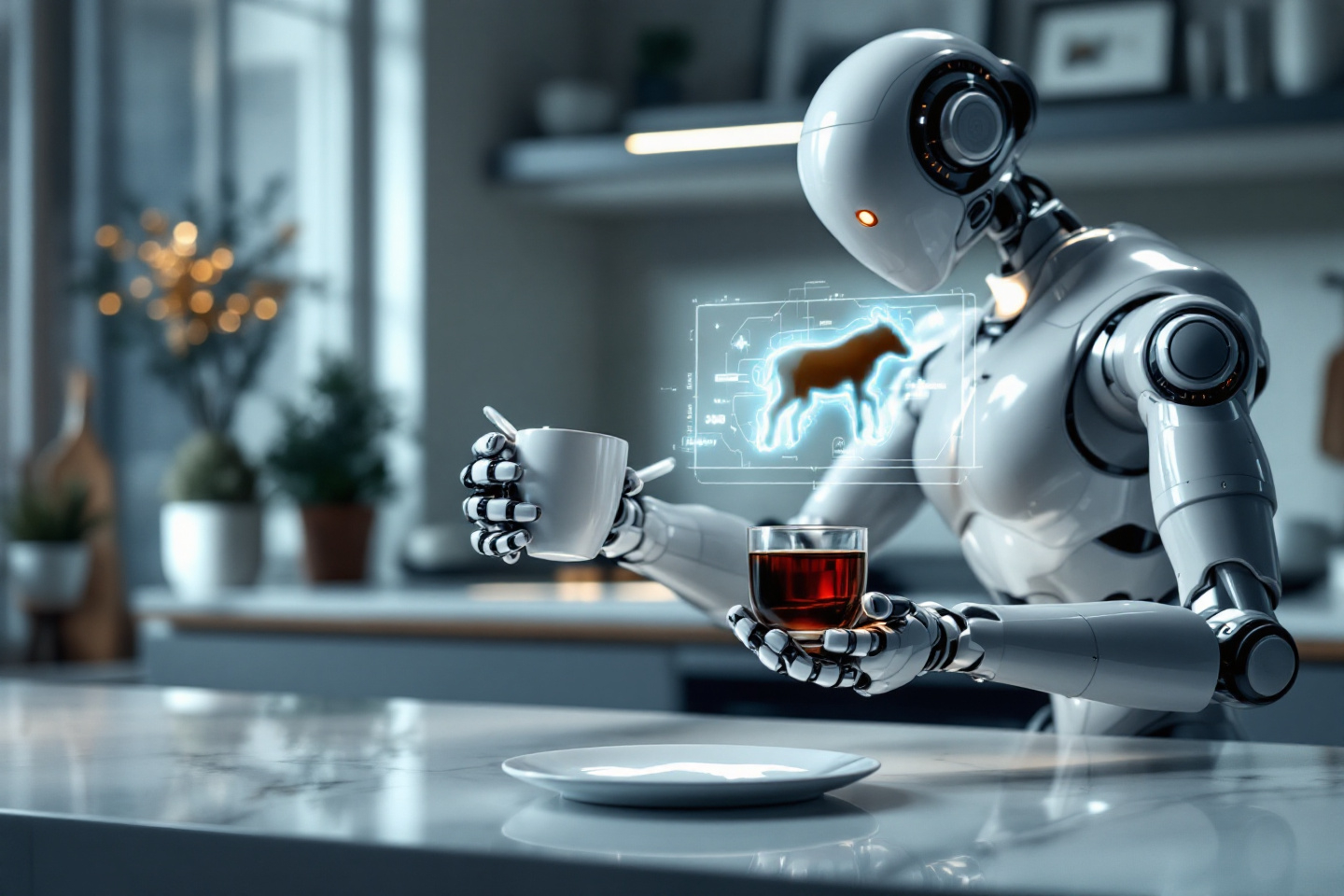

Imagine a robot that can make you coffee, decorate a plate with a drawing, and adapt to unexpected changes in its environment, all from a single verbal request. This isn’t science fiction anymore. It’s the promise of ELLMER, a research system that combines a large language model, a robot arm, and real-time sensor feedback.

What is ELLMER?

ELLMER (Embodied Large Language Model-Enabled Robot) is a robotic framework that combines GPT-4 with real-time sensorimotor feedback, specifically vision and force sensing. With a large language model (LLM) in the control loop, the robot can carry out long-horizon, complex tasks in unpredictable environments. It is a step toward more general-purpose robotic intelligence.

Why It Matters: Embodied Cognition for Robots

Traditional AI systems process language or perform tasks in isolated digital environments. ELLMER brings cognition into the real world, following the theory of embodied cognition—the idea that intelligence is grounded in interaction with the physical environment.

In ELLMER, this means the robot can:

- Interpret abstract commands

- Break them into actionable steps

- Use real-time sensory input (vision and force)

- Adapt dynamically to changes like object movement or unknown drawer mechanisms

How It Works

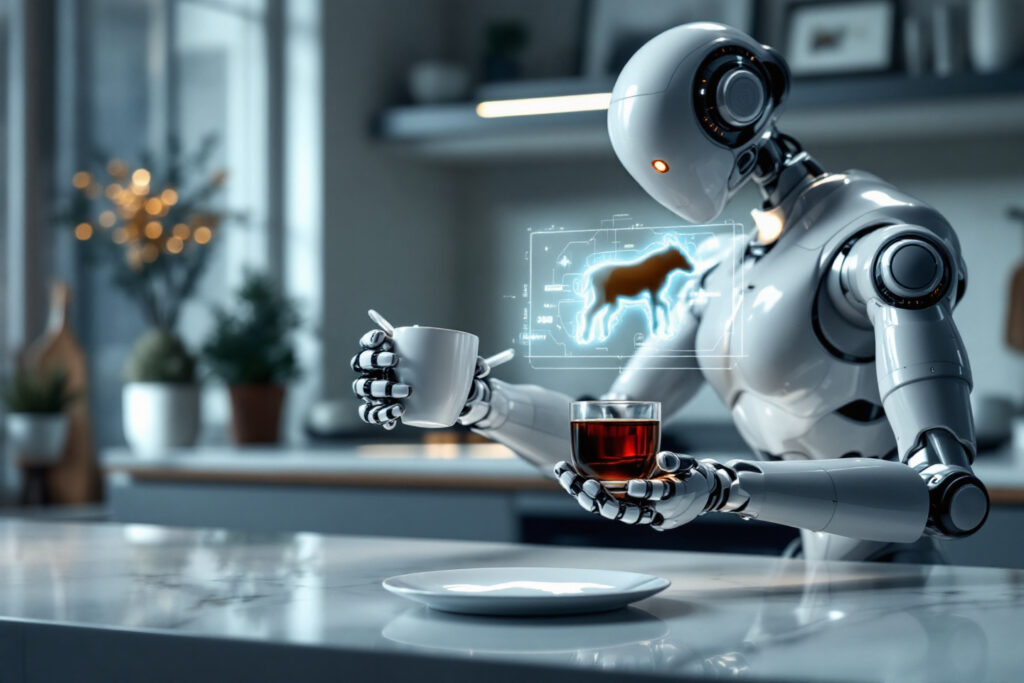

At its core, ELLMER takes a voice or text command and uses GPT-4 to generate a high-level plan. Four components then ground that plan in the physical world:

- Vision feedback using a 3D voxel map and Azure Kinect to detect and locate objects

- Force feedback from sensors that help control pouring, gripping, and drawing pressure

- Retrieval-Augmented Generation (RAG) to pull relevant code snippets from a curated knowledge base

- Robotic execution via a Kinova Gen3 robotic arm with real-time updates at 40 Hz
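To make the 40 Hz figure concrete, here is a minimal sketch of a fixed-rate feedback loop: read a sensor, compute a command, send it, then wait for the next tick. The function and parameter names (`run_feedback_loop`, `read_sensors`, `compute_command`, `send_command`) are hypothetical and are not taken from ELLMER or from the Kinova API; the sensor and actuator are plain Python callables standing in for real hardware.

```python
import time
from typing import Callable


def run_feedback_loop(
    read_sensors: Callable[[], float],
    compute_command: Callable[[float], float],
    send_command: Callable[[float], None],
    rate_hz: float = 40.0,
    steps: int = 4,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Run `steps` sense-compute-act cycles at roughly `rate_hz`."""
    period = 1.0 / rate_hz  # 0.025 s per cycle at 40 Hz
    next_tick = time.monotonic()
    for _ in range(steps):
        reading = read_sensors()                 # e.g. a force or vision estimate
        send_command(compute_command(reading))   # act on the fresh reading
        # Schedule against absolute ticks so timing errors do not accumulate.
        next_tick += period
        remaining = next_tick - time.monotonic()
        if remaining > 0:
            sleep(remaining)


# Stand-in hardware: a constant sensor and a list that records commands.
sent = []
run_feedback_loop(lambda: 2.0, lambda r: 0.5 * r, sent.append, steps=3)
print(sent)
```

The `sleep` parameter exists only so the loop can be exercised without waiting; a real controller would usually rely on the robot driver's own timing.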

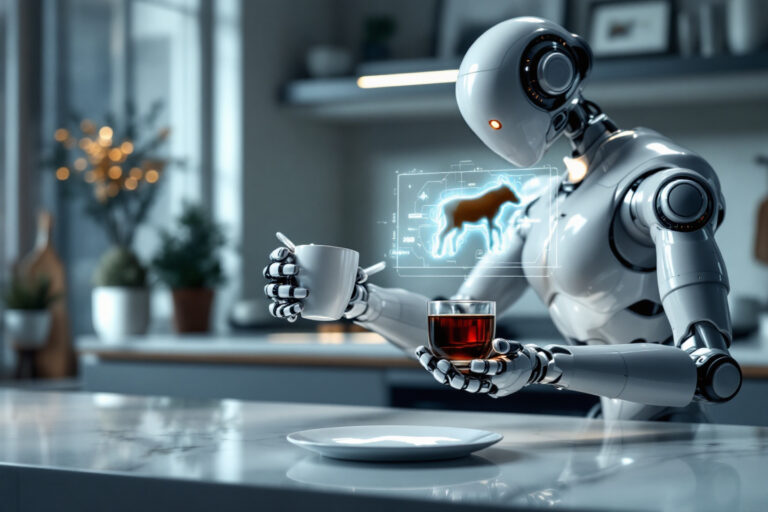

For example, in a demo task, ELLMER responded to the prompt:

“I’m tired, with friends due for cake soon. Can you make me a hot beverage, and decorate the plate with a random animal of your choice?”

It found a cup, brewed coffee, picked a drawing tool, generated an animal silhouette via DALL·E, and drew it on a plate—all autonomously.

Key Innovations

1. Real-World Language Understanding

GPT-4 does more than generate code: it interprets each request in context. ELLMER decomposes a vague command into subtasks such as “find mug,” “open drawer,” and “pour water,” and adapts on the fly when, say, the mug is moved mid-task.
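One way to picture this adaptation is a plan executor that asks perception for an object's position before every step, rather than trusting a position recorded earlier. The sketch below is illustrative only: the `Subtask` type, `execute_plan`, and the hand-written plan are assumptions standing in for what the language model and vision system would provide, and the "scene" is a plain dictionary.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Position = Tuple[float, float, float]


@dataclass
class Subtask:
    name: str    # e.g. "find mug"
    target: str  # object the step acts on


def execute_plan(
    plan: List[Subtask],
    locate: Callable[[str], Optional[Position]],
) -> List[Tuple[str, Position]]:
    """Run each step against the target's current position."""
    executed = []
    for step in plan:
        position = locate(step.target)  # re-query perception on every step
        if position is None:
            break                       # target lost: stop instead of guessing
        executed.append((step.name, position))
    return executed


# Toy scene in which the mug is moved while the robot is busy elsewhere.
scene = {"mug": (0.40, 0.10, 0.0), "kettle": (0.10, 0.30, 0.0)}
calls = {"n": 0}


def locate(name: str) -> Optional[Position]:
    calls["n"] += 1
    if calls["n"] == 2:  # simulate someone moving the mug mid-task
        scene["mug"] = (0.55, -0.05, 0.0)
    return scene.get(name)


plan = [
    Subtask("find mug", "mug"),
    Subtask("pick up kettle", "kettle"),
    Subtask("pour water", "mug"),
]
result = execute_plan(plan, locate)
print(result)
```

Because the position is looked up at execution time, the final "pour water" step uses the mug's new location without any change to the plan itself.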

2. RAG for Robotic Intelligence

RAG lets ELLMER search its task-specific database for pre-written motion code at run time. The language model can then build on relevant, validated examples instead of generating known movements from scratch.
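The retrieval step can be sketched in a few lines. The example below ranks entries in a tiny knowledge base by word-overlap cosine similarity with the request and returns the best-matching snippet. This is a deliberately simple stand-in: a production RAG system would typically use learned embeddings, and the knowledge-base descriptions and snippet strings (`pour(container, target)` and so on) are invented for illustration, not taken from ELLMER.

```python
import math
import re
from collections import Counter
from typing import Dict, List

# Hypothetical knowledge base: description -> motion-code snippet.
KNOWLEDGE_BASE: Dict[str, str] = {
    "pour liquid from a kettle into a cup": "pour(container, target)",
    "open a drawer by pulling the handle": "open_drawer(handle)",
    "draw an outline on a plate with a pen": "draw(points, surface)",
}


def _vectorize(text: str) -> Counter:
    """Bag-of-words vector: lowercase word -> count."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)  # Counter returns 0 for missing words
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, kb: Dict[str, str], top_k: int = 1) -> List[str]:
    """Return the snippets whose descriptions best match the query."""
    q = _vectorize(query)
    ranked = sorted(kb, key=lambda desc: _cosine(q, _vectorize(desc)), reverse=True)
    return [kb[desc] for desc in ranked[:top_k]]


print(retrieve("pour hot water into the cup", KNOWLEDGE_BASE))
```

The retrieved snippet would then be placed in the language model's prompt alongside the user's request, so the model adapts known code rather than writing it blind.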

3. Force-Controlled Motion

Pouring coffee or drawing with a pen requires tactile precision. ELLMER uses six-axis force sensors to fine-tune pressure and grip, achieving accuracy even when vision is partially blocked—like pouring into a moving cup.
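As a rough illustration of force feedback, the sketch below uses a proportional controller to press a pen until the measured contact force reaches a target. Everything here is an assumption made for the example: the surface is modeled as a simple spring, only the normal force is considered (not all six axes), and the stiffness, gain, and 2 N target are made-up values rather than figures from the paper.

```python
from typing import List


def simulated_contact_force(depth_m: float, stiffness_n_per_m: float = 1000.0) -> float:
    """Spring model of the surface: no force until the pen touches it."""
    return stiffness_n_per_m * max(0.0, depth_m)


def track_contact_force(
    target_n: float,
    gain_m_per_n: float = 0.0005,
    steps: int = 20,
) -> List[float]:
    """Adjust pen depth each cycle in proportion to the force error."""
    depth_m = 0.0
    history = []
    for _ in range(steps):
        force_n = simulated_contact_force(depth_m)       # "sensor" reading
        history.append(force_n)
        depth_m += gain_m_per_n * (target_n - force_n)   # proportional correction
    return history


forces = track_contact_force(target_n=2.0)
print(f"first reading: {forces[0]:.3f} N, last reading: {forces[-1]:.3f} N")
```

With these values the force error halves on every cycle, so the reading settles near the target within a handful of updates. A controller like this depends only on the force signal, which is why it can keep working when the camera's view is blocked.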

4. Artistic Abilities

Using DALL·E, the robot can generate outlines from prompts like “random bird” and then draw them with consistent pen pressure. This opens doors to creative applications like cake decoration or personalized gifts.
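Between generating an image and drawing it, the outline has to be converted from pixel coordinates into positions on the plate. The sketch below shows one plausible way to do that: center the outline, scale it to fit inside the plate, and flip the vertical axis. The function name `fit_outline_to_plate`, the 8 cm plate radius, and the 80% margin are assumptions for illustration; the source does not describe how ELLMER performs this step.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def fit_outline_to_plate(
    pixels: List[Point],
    plate_radius_m: float = 0.08,
    margin: float = 0.8,
) -> List[Point]:
    """Map pixel outline points to plate-centered coordinates in meters."""
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    cx = (min(xs) + max(xs)) / 2.0  # center of the outline's bounding box
    cy = (min(ys) + max(ys)) / 2.0
    # Farthest point from the center decides the scale.
    reach = max(math.hypot(x - cx, y - cy) for x, y in pixels)
    scale = margin * plate_radius_m / reach if reach else 0.0
    # Image rows grow downward, so flip y to get a conventional "up" axis.
    return [((x - cx) * scale, (cy - y) * scale) for x, y in pixels]


square = [(0, 0), (100, 0), (100, 100), (0, 100)]
waypoints = fit_outline_to_plate(square)
print(waypoints)
```

The resulting waypoints could then be followed by a force-controlled drawing motion so the pen keeps steady pressure along the whole path.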

Real-World Applications

ELLMER has implications far beyond coffee-making:

- Assistive care robots that understand and respond to human needs

- Manufacturing where flexibility and adaptation are key

- Hospitality and food service with customized, on-demand tasks

- Creative tasks like drawing, design, or art replication

What’s Next?

The researchers acknowledge current limitations like object misidentification in cluttered scenes and slower task-switching. But with continued development—including better computer vision, tactile sensing, and learning-from-demonstration—ELLMER could become the foundation for the next generation of autonomous, intelligent machines.

Final Thoughts

ELLMER isn’t just a robot that makes coffee—it’s a blueprint for embodied AI systems that can think, adapt, and act. By combining GPT-4’s cognitive power with real-time sensory data and a growing knowledge base, it marks a leap toward robotic assistants that can truly collaborate with humans in dynamic, real-world settings.

Article derived from: Mon-Williams, R., Li, G., Long, R. et al. Embodied large language models enable robots to complete complex tasks in unpredictable environments. Nat Mach Intell 7, 592–601 (2025). https://doi.org/10.1038/s42256-025-01005-x